Unveiling the Inner Workings of Deep Learning: A Comprehensive Guide to Class Activation Maps

Deep learning models, with their remarkable ability to learn intricate patterns from vast datasets, have revolutionized numerous fields, from image recognition to natural language processing. However, understanding the reasoning behind these models’ decisions can be a complex task. This is where Class Activation Maps (CAMs) emerge as invaluable tools, offering a window into the inner workings of deep learning models, particularly in the realm of image classification.

Understanding Class Activation Maps: A Visual Explanation of Model Decisions

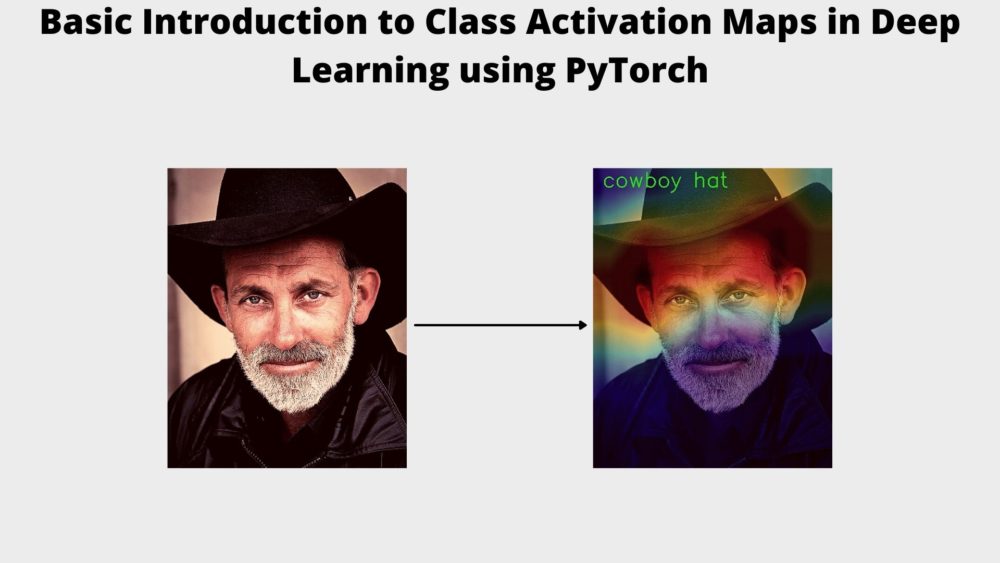

Imagine a deep learning model tasked with identifying a cat in an image. It might correctly classify the image, but how does it arrive at that conclusion? CAMs provide a visual representation of the model’s decision-making process, highlighting the specific image regions that contributed most to the classification.

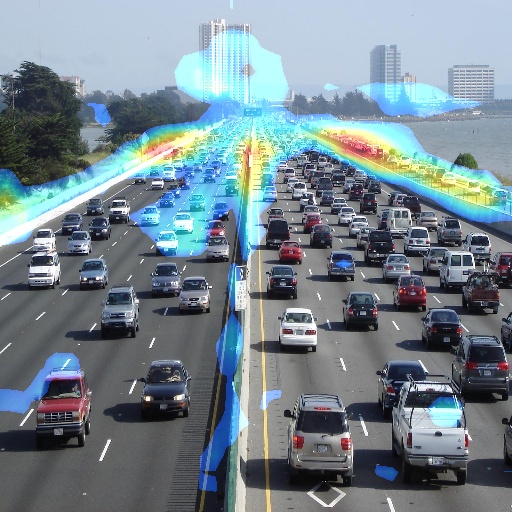

In essence, a CAM is a heatmap overlaid on the original image. The heatmap represents the activation scores of different regions in the image, with brighter areas indicating higher activation and thus greater contribution to the final classification. This visual representation allows researchers and practitioners to understand which parts of the image the model focuses on and how it arrives at its decision.
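The overlay step can be illustrated with a minimal NumPy sketch. The function names `normalize_heatmap` and `overlay_heatmap`, the `alpha` blending factor, and the choice to draw the heatmap in the red channel only are all assumptions made for illustration; real tools typically apply a full colormap instead.

```python
import numpy as np


def normalize_heatmap(heatmap: np.ndarray) -> np.ndarray:
    """Rescale a 2-D array of activation scores to the range [0, 1]."""
    heatmap = heatmap.astype(np.float64)
    span = heatmap.max() - heatmap.min()
    if span == 0:
        # A constant map carries no localization signal.
        return np.zeros_like(heatmap)
    return (heatmap - heatmap.min()) / span


def overlay_heatmap(image: np.ndarray, heatmap: np.ndarray, alpha: float = 0.4) -> np.ndarray:
    """Blend a heatmap onto an RGB image whose values lie in [0, 1].

    The heatmap is drawn in the red channel only, a deliberately simple
    stand-in for a real colormap (an assumption of this sketch).
    """
    if image.shape[:2] != heatmap.shape:
        raise ValueError("heatmap must match the image height and width")
    colored = np.zeros_like(image, dtype=np.float64)
    colored[..., 0] = normalize_heatmap(heatmap)
    return (1.0 - alpha) * image + alpha * colored


# Toy data: a uniform gray 4x4 image with a "hot" 2x2 region in the middle.
image = np.full((4, 4, 3), 0.5)
heatmap = np.zeros((4, 4))
heatmap[1:3, 1:3] = 2.0
blended = overlay_heatmap(image, heatmap)
```

In the blended result, pixels inside the hot region are shifted toward red, while the rest of the image is only dimmed.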

The Mechanics of Class Activation Maps: A Deeper Dive

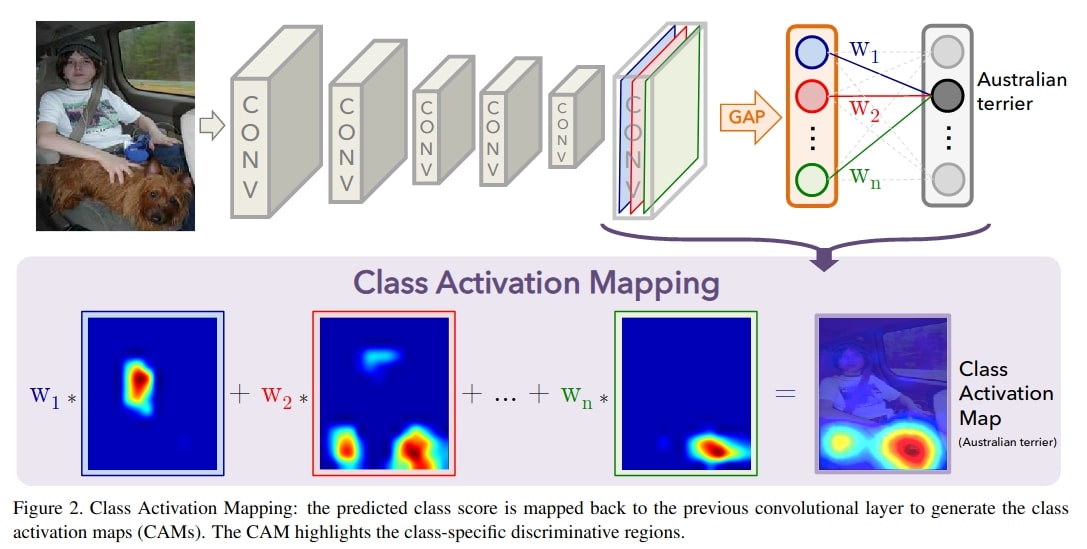

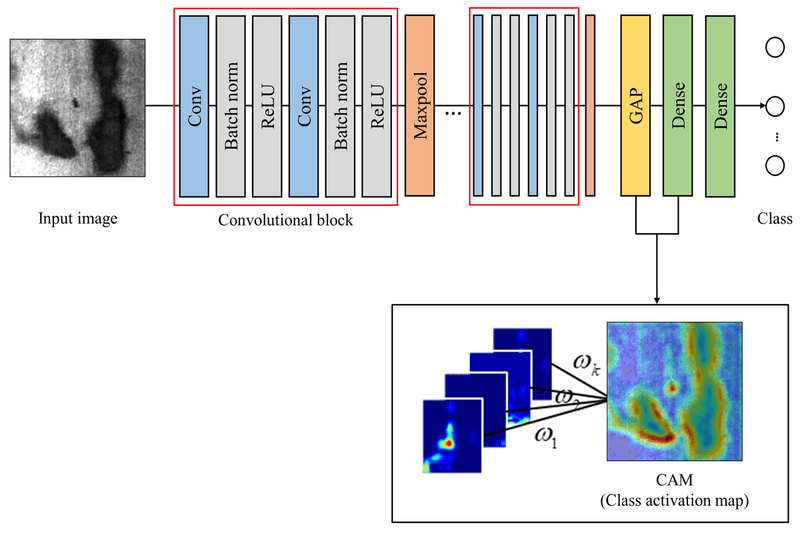

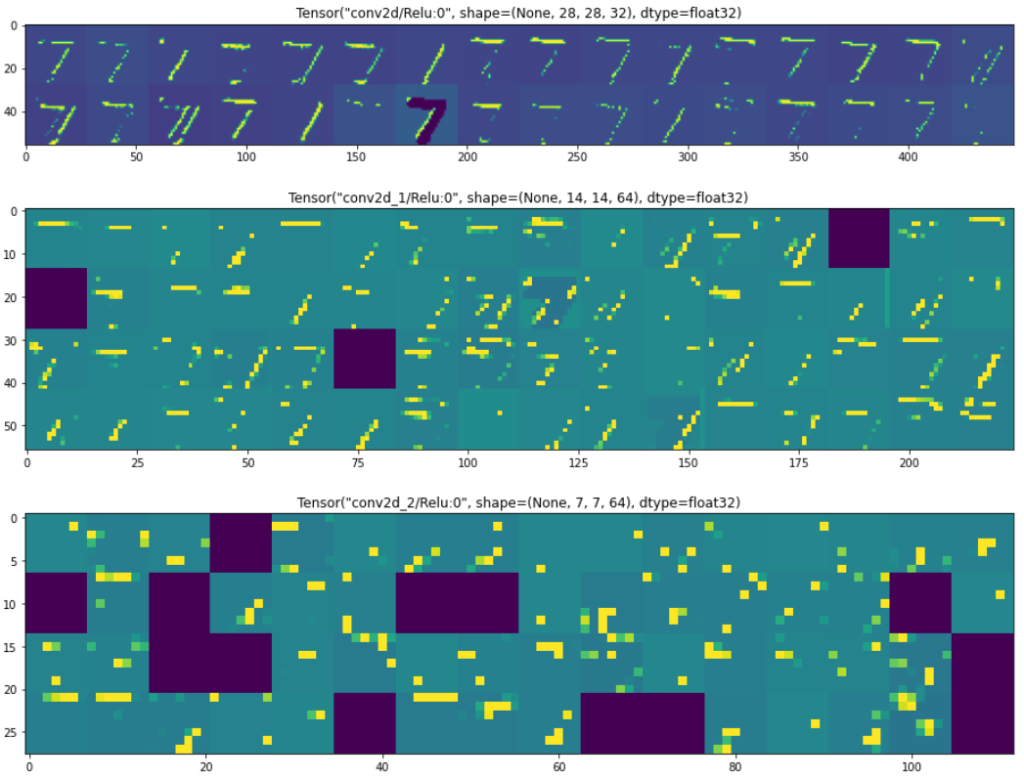

Creating a CAM starts from the feature maps produced by the model’s final convolutional layer. In the original CAM formulation, these feature maps pass through a global average pooling (GAP) layer, which reduces each channel to a single number, and the pooled values feed a single fully connected layer that produces the class scores.

Each feature map channel corresponds to a specific feature learned by the model. To build the map for a particular class, each channel is multiplied by the fully connected layer’s weight that connects that channel to the class, and the weighted channels are summed. The result is an activation score for each spatial location of the feature map; upsampling it to the input resolution yields the CAM heatmap.
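The weighted sum can be sketched in a few lines of NumPy. This assumes the classic CAM setup (final convolutional feature maps, then global average pooling, then one linear classification layer). The name `class_activation_map`, the array shapes, and the random toy data are hypothetical and only serve the illustration.

```python
import numpy as np


def class_activation_map(feature_maps: np.ndarray, fc_weights: np.ndarray, class_idx: int) -> np.ndarray:
    """Weighted sum of feature-map channels for one class.

    feature_maps: shape (K, H, W), output of the final convolutional layer.
    fc_weights:   shape (num_classes, K), weights of the linear layer that
                  follows global average pooling.
    """
    weights = fc_weights[class_idx]                      # shape (K,)
    return np.tensordot(weights, feature_maps, axes=1)   # shape (H, W)


# Toy data standing in for a real network (assumption: 8 channels, 7x7 maps).
rng = np.random.default_rng(0)
feature_maps = rng.random((8, 7, 7))
fc_weights = rng.standard_normal((3, 8))

cam = class_activation_map(feature_maps, fc_weights, class_idx=1)

# Sanity check: averaging the CAM over space reproduces the class score
# (without the bias term), because pooling and the weighted sum commute.
pooled = feature_maps.mean(axis=(1, 2))
class_score = fc_weights[1] @ pooled

# Nearest-neighbor upsampling from 7x7 to 224x224; real implementations
# usually interpolate bilinearly instead.
upsampled = np.kron(cam, np.ones((32, 32)))
```

The sanity check shows why the map is meaningful: the class score is exactly the spatial average of the CAM, so each location’s value is its share of that score.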

Benefits of Class Activation Maps: Unveiling the Black Box

CAMs provide numerous benefits, empowering researchers and practitioners to gain a deeper understanding of deep learning models:

- Model Interpretability: CAMs offer a visual and intuitive way to understand the decision-making process of deep learning models, particularly in image classification tasks. This transparency helps to demystify the "black box" nature of these models and makes it easier to judge when their predictions can be trusted.

- Model Debugging and Improvement: By visualizing the model’s attention, CAMs can reveal potential biases or errors in the model’s decision-making process, such as a classifier that focuses on the background rather than the object. This information can be used to refine the model architecture, improve data preprocessing, or address other issues that hinder performance.

- Feature Visualization: CAMs show which image regions activate the features the model has learned, offering insights into the model’s understanding of the image content. This can be valuable for tasks such as object localization and image segmentation, and for checking whether the model relies on cues that are likely to generalize to unseen data.

- Explainable AI: In domains where transparency and explainability are paramount, such as medical diagnosis or autonomous driving, CAMs can play a vital role in building trust and confidence in deep learning models. By showing what the model’s prediction was based on, CAMs can support responsible and ethical deployment of these powerful technologies.
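As an illustration of the object localization use mentioned in the list above, a rough bounding box can be derived from a CAM by thresholding it. The sketch below is a simplification under stated assumptions: the function name `cam_bounding_box` and the default threshold of half the peak value are hypothetical, and the box simply covers every cell above the threshold rather than isolating a single connected region.

```python
import numpy as np


def cam_bounding_box(cam: np.ndarray, threshold: float = 0.5):
    """Return (row_min, col_min, row_max, col_max), inclusive, covering all
    cells whose value is at least `threshold` times the peak value.

    Returns None when the map has no positive peak or nothing passes the
    threshold.
    """
    peak = cam.max()
    if peak <= 0:
        return None
    rows, cols = np.nonzero(cam >= threshold * peak)
    if rows.size == 0:
        return None
    return int(rows.min()), int(cols.min()), int(rows.max()), int(cols.max())


# Toy heatmap: one strong 2x4 region plus a weak, isolated activation.
cam = np.zeros((6, 6))
cam[2:4, 1:5] = 1.0
cam[0, 0] = 0.2
bbox = cam_bounding_box(cam)
```

The weak activation at the corner falls below the threshold, so the box covers only the strong region. Coordinates are in heatmap cells and would need to be scaled to the input image size.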

Types of Class Activation Maps: A Spectrum of Approaches

The original CAM formulation requires a specific architecture. Several variants and related attribution methods have been developed to lift that restriction or to produce cleaner maps:

- Grad-CAM: Gradient-weighted Class Activation Mapping (Grad-CAM) uses the gradients of the class score with respect to a convolutional layer’s feature maps to weight those maps and generate the heatmap. Because it relies only on gradients, it can visualize attention even for models without global average pooling layers.

- Guided Backpropagation: This method modifies backpropagation so that only positive gradients are passed back through the ReLU units, which reduces noise and produces sharper, pixel-level maps. It can be combined with Grad-CAM (Guided Grad-CAM) to obtain maps that are both detailed and class-specific.

- Layer-wise Relevance Propagation (LRP): LRP is a more general method that can be applied to various deep learning architectures. It propagates relevance scores backward through the network from the output, providing insights into the contribution of each neuron and layer to the final prediction.

- SmoothGrad: This technique averages gradient-based maps computed over several noisy copies of the input. The averaging reduces noise and improves the quality of the heatmaps, especially for models that are highly sensitive to small input variations.
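To make the Grad-CAM item above concrete, the following sketch computes a Grad-CAM heatmap from two arrays that would normally come from a deep learning framework: the feature maps of a chosen convolutional layer and the gradients of the class score with respect to them. Here both are hand-written toy values, and the function name `grad_cam` is an assumption for illustration.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap from feature maps and their gradients.

    activations: shape (K, H, W), feature maps of the chosen layer.
    gradients:   shape (K, H, W), gradient of the class score with respect
                 to each entry of `activations`.
    """
    # One weight per channel: the spatial average of that channel's gradient.
    weights = gradients.mean(axis=(1, 2))                 # shape (K,)
    cam = np.tensordot(weights, activations, axes=1)      # shape (H, W)
    # Keep only locations with a positive influence on the class score.
    return np.maximum(cam, 0.0)


# Toy values: channel 0 supports the class, channel 1 argues against it.
activations = np.array([
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 1.0]],
])
gradients = np.array([
    np.full((2, 2), 0.5),
    np.full((2, 2), -1.0),
])
heatmap = grad_cam(activations, gradients)
```

The location activated by the supporting channel stays in the heatmap, while the location activated by the opposing channel is clipped to zero by the final ReLU step.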

Applications of Class Activation Maps: Beyond Image Classification

While CAMs are particularly valuable in image classification, their applications extend beyond this domain. Here are some key areas where CAMs can prove beneficial:

- Object Detection: CAMs can be used to visualize the regions of interest that the model focuses on when detecting objects in an image. This can help to understand the model’s reasoning behind its object detection decisions and identify potential areas for improvement.

- Natural Language Processing: While not as commonly used in NLP, CAM-like techniques can be employed to visualize the attention of language models, highlighting the words or phrases that contribute most to the model’s predictions. This can be valuable for understanding the model’s reasoning and identifying potential biases in its language processing capabilities.

- Medical Imaging: CAMs can be applied to medical images, such as X-rays or MRIs, to help radiologists understand the model’s reasoning behind its diagnoses. This can facilitate more informed clinical decisions and improve patient care.

- Robotics: In robotics, CAMs can be used to visualize the features that the robot’s vision system focuses on during navigation or object manipulation. This can help to improve the robot’s performance and ensure its safe operation in complex environments.

FAQs on Class Activation Maps: Demystifying the Concept

Q: What are the limitations of Class Activation Maps?

A: While CAMs offer valuable insights, they do have certain limitations. One limitation is their dependence on the model’s architecture. Certain model architectures, such as those without global average pooling layers, may require specialized CAM techniques to generate meaningful heatmaps. Additionally, CAMs are not perfect representations of the model’s internal workings, and the heatmaps may not always accurately reflect the model’s decision-making process.

Q: How do I implement Class Activation Maps in my deep learning project?

A: Implementing CAMs requires access to the model’s internal representations, specifically the feature maps produced by the final convolutional layer, and, for the original CAM formulation, the weights of the classification layer that follows global average pooling. Frameworks such as Keras and PyTorch let you read these intermediate outputs, for example through forward hooks in PyTorch or a sub-model that exposes the layer’s output in Keras. There are also readily available open-source implementations of various CAM techniques that can be integrated into your project.
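As one possible way to do this in PyTorch, the sketch below registers a forward hook to capture feature maps and then builds a CAM from them. `TinyCNN` is a hypothetical, untrained toy model invented for this example (convolution, ReLU, global average pooling, one linear layer); with a real network you would attach the hook to its final convolutional block instead.

```python
import torch
import torch.nn as nn


class TinyCNN(nn.Module):
    """Hypothetical toy classifier: conv + ReLU -> global average pooling -> linear."""

    def __init__(self, num_classes: int = 3):
        super().__init__()
        self.features = nn.Sequential(nn.Conv2d(3, 8, kernel_size=3, padding=1), nn.ReLU())
        self.pool = nn.AdaptiveAvgPool2d(1)
        self.fc = nn.Linear(8, num_classes)

    def forward(self, x):
        x = self.features(x)
        x = self.pool(x).flatten(1)
        return self.fc(x)


captured = {}


def save_output(module, inputs, output):
    # Forward hooks receive the module, its inputs, and its output.
    captured["feature_maps"] = output.detach()


torch.manual_seed(0)
model = TinyCNN().eval()
handle = model.features.register_forward_hook(save_output)
with torch.no_grad():
    logits = model(torch.rand(1, 3, 16, 16))  # random stand-in for an image
handle.remove()  # detach the hook once the feature maps are captured

class_idx = logits.argmax(dim=1).item()
feature_maps = captured["feature_maps"][0]        # shape (8, 16, 16)
weights = model.fc.weight[class_idx].detach()     # shape (8,)
cam = torch.einsum("k,khw->hw", weights, feature_maps)
```

Because this toy model follows the classic CAM layout, the spatial mean of `cam` plus the class bias equals the predicted class score, which is a handy check that the right layer and weights were used.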

Q: Can Class Activation Maps be used to explain the decisions of any deep learning model?

A: While CAMs are primarily used for image classification models, they can be adapted to other types of models with some modifications. However, their effectiveness may vary depending on the model architecture and the nature of the task.

Q: Are Class Activation Maps a substitute for other interpretability methods?

A: CAMs are a valuable tool for understanding deep learning models, but they are not a substitute for other interpretability methods. Different methods offer unique perspectives and insights, and combining multiple techniques can provide a more comprehensive understanding of the model’s behavior.

Tips for Effective Class Activation Map Utilization: Maximizing Insights

-

Use multiple CAM techniques: Experiment with different CAM approaches to gain a more comprehensive understanding of the model’s decision-making process. Different techniques might highlight different aspects of the model’s attention.

-

Analyze the heatmaps carefully: Examine the heatmaps in conjunction with the model’s predictions and the underlying image content. Consider the context and the specific features that the model is focusing on.

-

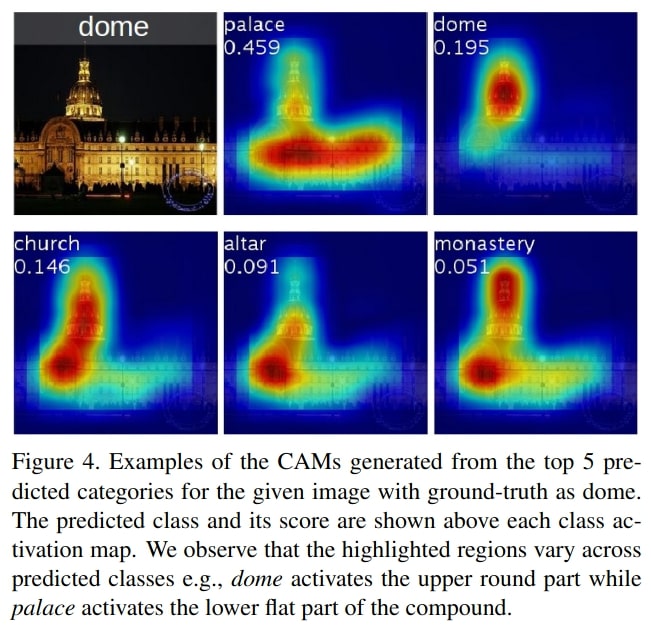

Compare heatmaps across different classes: Analyze how the heatmaps vary across different classes, revealing the model’s ability to distinguish between different objects or concepts.

-

Use CAMs for model debugging and improvement: Pay attention to areas where the heatmaps do not align with expected behavior, as these could indicate potential biases or errors in the model’s reasoning.

-

Incorporate CAMs into your workflow: Integrate CAMs into your deep learning workflow to ensure transparency and explainability throughout the model development process.
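For the tip on comparing heatmaps across classes, one simple way to quantify how much two class heatmaps overlap is the intersection over union (IoU) of their most active regions. This is only a sketch: the helper names `hot_region` and `heatmap_iou`, the threshold of half the peak value, and the toy "cat" and "dog" maps are assumptions, not part of any standard CAM workflow.

```python
import numpy as np


def hot_region(cam: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Boolean mask of cells at or above `threshold` times the peak value."""
    peak = cam.max()
    if peak <= 0:
        return np.zeros(cam.shape, dtype=bool)
    return cam >= threshold * peak


def heatmap_iou(cam_a: np.ndarray, cam_b: np.ndarray, threshold: float = 0.5) -> float:
    """Intersection over union of the hot regions of two heatmaps."""
    a = hot_region(cam_a, threshold)
    b = hot_region(cam_b, threshold)
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 0.0
    return float(np.logical_and(a, b).sum() / union)


# Toy heatmaps for two classes that share a single cell.
cat_cam = np.zeros((4, 4))
cat_cam[0:2, 0:2] = 1.0
dog_cam = np.zeros((4, 4))
dog_cam[1:3, 1:3] = 1.0

overlap = heatmap_iou(cat_cam, dog_cam)
```

A value near 0 suggests the model looks at different regions for the two classes, while a value near 1 suggests it relies on the same region for both, which may be worth inspecting by eye.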

Conclusion: Towards a More Transparent Future of Deep Learning

Class Activation Maps offer a powerful tool for understanding and interpreting the decisions of deep learning models, particularly in image classification. By providing a visual representation of the model’s attention, CAMs facilitate model debugging, improve transparency, and enable more informed decision-making in various domains. As deep learning continues to evolve, tools like CAMs will become increasingly important for building trust, ensuring ethical deployment, and maximizing the potential of these powerful technologies.
